BI’s Impact on the Data Center

Business Intelligence (BI) has earned its place in nearly every organization. While its value to decision making is clear, its impact on the data center is often overlooked.

Myth: BI will help us make more informed business decisions.

Reality: BI will help us make more informed business decisions, but only when we systematically provide it with high-quality data.

The second part of that statement is critical, because every organization has its own mix of data sources: database tables, unstructured data, formulas, coefficients and so on. The value you get from a BI tool depends directly on your ability to feed it the right data at the right time. In a demo, BI always looks impressive, because the stock data sets load like clockwork and produce vivid results. In production, however, the quality of the resulting information often varies while IT teams work out the most efficient way to supply BI with data. Much as a power plant depends on the quality of its feedstock, BI tools depend heavily on the data center's supply of data.

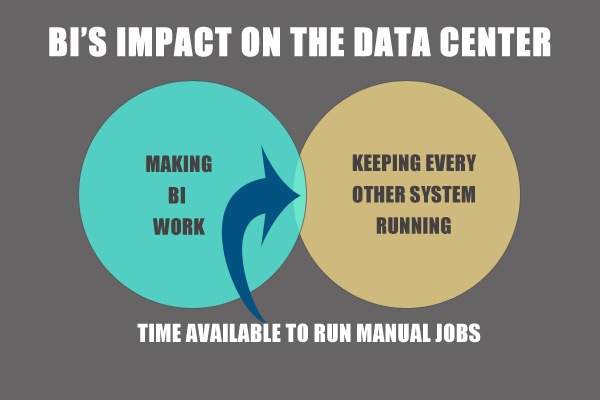

When integrating BI, you are not only dealing with larger and more numerous data sets; you are also processing those data sets on a regular, repeated basis. The speed and reliability with which BI data gets processed are ultimately in the hands of data center managers.

Applying Automation to BI

From an automation standpoint, we examine BI along four dimensions:

- Scale

- Velocity

- Auditability

- Extensibility

A critical examination of BI along these dimensions helps determine whether native tools and current staff are sufficient to keep BI running, or whether a more centralized solution is needed to manage the growing number of processes feeding into BI.

Scale

Business users often underestimate the resources needed to move data. Well-prepared data center managers can enumerate the size and location of every data source required for a set of BI processes. And when "big data" needs to be moved to a new location for processing, they know the time and bandwidth the move will require. It can be enticing to see the impact of 3 years of historical purchase data on a BI decision, but that analysis is difficult to deliver if it depends on successfully transferring a 20 TB dump of unstructured data from one data center to another.
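
To make the scale question concrete, here is a minimal back-of-the-envelope sketch for estimating how long a bulk transfer will take. The function name `transfer_hours` and the 70% link-efficiency default are illustrative assumptions, not measured figures; substitute your own throughput numbers.

```python
def transfer_hours(size_tb: float, bandwidth_gbps: float, efficiency: float = 0.7) -> float:
    """Estimate wall-clock hours to move size_tb terabytes over a bandwidth_gbps link.

    efficiency is an ASSUMED fraction of nominal link speed actually achieved
    (protocol overhead, contention, competing traffic); 0.7 is illustrative only.
    """
    if size_tb < 0 or bandwidth_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, bandwidth, or efficiency")
    bits = size_tb * 1e12 * 8  # decimal terabytes -> bits
    effective_bps = bandwidth_gbps * 1e9 * efficiency  # usable bits per second
    return bits / effective_bps / 3600  # seconds -> hours


if __name__ == "__main__":
    for gbps in (1, 10):
        print(f"20 TB over {gbps} Gbps: ~{transfer_hours(20, gbps):.1f} hours")
```

Under these assumptions, 20 TB takes roughly 63 hours over a 1 Gbps link and about 6.3 hours over 10 Gbps, which is why a multi-terabyte move needs to be planned well ahead of the BI job that depends on it.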

Velocity

Expect a mix of ETL processes, virtual machine starts and stops, and real-time connections each time you run a BI process. Developing and maintaining a predictable, fail-safe schedule for these loading processes ensures that IT administrators aren't called upon to run them by hand.
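
A predictable, fail-safe schedule largely comes down to two rules: run each step only after the steps it depends on have finished, and retry transient failures before escalating to a person. The sketch below illustrates both rules using Python's standard-library `graphlib`; the step names and the retry policy are hypothetical placeholders, and a dedicated scheduling or workload automation tool would normally handle this for you.

```python
import time
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable, Dict, Iterable, List


def run_pipeline(
    steps: Dict[str, Callable[[], None]],
    depends_on: Dict[str, Iterable[str]],
    max_attempts: int = 3,
    backoff_seconds: float = 1.0,
) -> List[str]:
    """Run each step after its dependencies, retrying failures up to max_attempts.

    Returns step names in the order they completed. Raises RuntimeError if a step
    keeps failing, so downstream steps never run against incomplete data.
    """
    graph = {name: set(depends_on.get(name, ())) for name in steps}
    unknown = set().union(*graph.values()) - set(steps)
    if unknown:
        raise ValueError(f"dependencies without a step: {sorted(unknown)}")

    completed: List[str] = []
    for name in TopologicalSorter(graph).static_order():  # raises CycleError on cycles
        for attempt in range(1, max_attempts + 1):
            try:
                steps[name]()
                completed.append(name)
                break
            except Exception as exc:
                if attempt == max_attempts:
                    raise RuntimeError(f"step {name!r} failed after {attempt} attempts") from exc
                time.sleep(backoff_seconds * attempt)  # wait longer after each failure
    return completed


if __name__ == "__main__":
    # Hypothetical nightly load: start the ETL VM, extract two sources, transform, load.
    steps = {
        "start_etl_vm": lambda: print("starting ETL VM"),
        "extract_orders": lambda: print("extracting orders"),
        "extract_crm": lambda: print("extracting CRM accounts"),
        "transform": lambda: print("joining and cleansing"),
        "load_bi_warehouse": lambda: print("loading BI warehouse"),
    }
    depends_on = {
        "extract_orders": ["start_etl_vm"],
        "extract_crm": ["start_etl_vm"],
        "transform": ["extract_orders", "extract_crm"],
        "load_bi_warehouse": ["transform"],
    }
    print(run_pipeline(steps, depends_on))
```

Failing loudly after the last retry, rather than skipping the step, is the design choice that keeps a half-loaded data set from silently reaching BI users.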

BI often promises real-time decision making. However, real-time data is not always an option. Making real-time data connections the default places an unnecessary burden on the data center, especially when comparable ETL workflows can be defined, monitored and audited with great precision.

That said, there are cases where real-time data connections are worth considering. Arbitrage trading strategies, where a delay could mean the difference between a $1M profit and a $10 profit, may be better served by real-time data.
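
One way to avoid defaulting to real-time connections is to state each feed's freshness requirement explicitly and check whether a scheduled ETL job can meet it. The sketch below assumes a simple rule of thumb: a batch feed's worst-case staleness is about one refresh interval plus one run time. The `Feed` fields and the example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feed:
    name: str
    max_staleness_s: float  # oldest data the business decision can tolerate, in seconds
    etl_interval_s: float   # how often a scheduled ETL run could start, in seconds
    etl_runtime_s: float    # how long one ETL run takes end to end, in seconds


def connection_mode(feed: Feed) -> str:
    """Return 'batch' when a scheduled ETL job keeps data fresh enough, else 'real-time'.

    A change made just after an extract starts is picked up by the next run and
    becomes visible when that run finishes, so worst-case staleness is roughly
    one interval plus one run time (an assumed, simplified model).
    """
    worst_case_staleness_s = feed.etl_interval_s + feed.etl_runtime_s
    return "batch" if worst_case_staleness_s <= feed.max_staleness_s else "real-time"


if __name__ == "__main__":
    feeds = [
        # A daily sales dashboard can tolerate data up to a day old.
        Feed("daily_sales_dashboard", max_staleness_s=24 * 3600, etl_interval_s=6 * 3600, etl_runtime_s=3600),
        # An arbitrage signal is stale after a fraction of a second.
        Feed("arbitrage_prices", max_staleness_s=0.05, etl_interval_s=60, etl_runtime_s=5),
    ]
    for feed in feeds:
        print(f"{feed.name}: {connection_mode(feed)}")
```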

Auditability

With BI tools accessing proprietary data and consuming considerable bandwidth, organizations need a comprehensive audit trail that records who initiated each workflow and how efficiently it was executed. No matter how critical a BI decision is, executing it should not add new risks or latency to established business processes.
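
A minimal version of such an audit trail records, for every workflow run, who started it, when, how long it took and whether it succeeded. The sketch below wraps a workflow in a Python context manager and appends one JSON record per run; the in-memory list stands in for whatever append-only, access-controlled store you would actually use, and the workflow and user names are hypothetical.

```python
import json
import time
from contextlib import contextmanager
from typing import Iterator, List


@contextmanager
def audited(workflow: str, initiator: str, log: List[str]) -> Iterator[None]:
    """Append a JSON audit record for one workflow run, whether it succeeds or fails."""
    record = {"workflow": workflow, "initiator": initiator, "started_at": time.time()}
    start = time.perf_counter()
    try:
        yield
        record["status"] = "succeeded"
    except Exception as exc:
        record["status"] = f"failed: {type(exc).__name__}"
        raise  # auditing must never hide a failure from the caller
    finally:
        record["duration_s"] = round(time.perf_counter() - start, 3)
        log.append(json.dumps(record))


if __name__ == "__main__":
    audit_log: List[str] = []
    with audited("nightly_sales_load", initiator="jdoe", log=audit_log):
        time.sleep(0.1)  # stand-in for the real ETL work
    print(audit_log[0])
```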

Extensibility

Data center managers have enough on their plate already. While it may be tempting to work around security settings so that business users can get BI jobs done, doing so is unacceptable by enterprise standards. At some point, the ability to run scripts, queries, complex ETL processes, FTP transfers and more (all of the general processes that precede BI processing) needs to extend beyond the data center. Giving users appropriate rights to do so (i.e., not passing around root/admin-level passwords!) ensures that IT admins don't become batch processors, waiting on the commands of business users.
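
Delegating job rights without sharing admin passwords usually means mapping each user to a role and each role to the job types it may launch. The sketch below shows that check in Python; the roles, users and action names are hypothetical, and in practice the mappings would come from your directory service or your scheduler's own access controls rather than hard-coded dictionaries.

```python
from typing import Callable, Dict, FrozenSet, TypeVar

T = TypeVar("T")

# Hypothetical roles: each one lists the job types its members may launch.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "bi_analyst": frozenset({"run_query", "refresh_dashboard"}),
    "bi_engineer": frozenset({"run_query", "refresh_dashboard", "run_etl", "ftp_transfer"}),
    "it_admin": frozenset({"run_query", "refresh_dashboard", "run_etl", "ftp_transfer", "edit_schedule"}),
}

# Hypothetical user-to-role assignments.
USER_ROLES: Dict[str, str] = {"alice": "bi_analyst", "bob": "bi_engineer", "carol": "it_admin"}


def can_run(user: str, action: str) -> bool:
    """True if the user's role grants the action; unknown users get nothing."""
    role = USER_ROLES.get(user)
    return role is not None and action in ROLE_PERMISSIONS.get(role, frozenset())


def submit_job(user: str, action: str, job: Callable[[], T]) -> T:
    """Run job on the user's behalf only if their role allows this action."""
    if not can_run(user, action):
        raise PermissionError(f"{user!r} is not allowed to {action!r}")
    return job()


if __name__ == "__main__":
    print(submit_job("bob", "run_etl", lambda: "ETL started"))
    print(can_run("alice", "run_etl"))
```

Because business users launch jobs through the role check, none of them needs the root or admin credentials the jobs ultimately run under.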

Data Center Managers Have a Lot to Add

Because they constantly work with limited resources (CPU, storage, time), IT pros are in a unique position to contribute to Business Intelligence planning. They understand the dependencies and security required to actually load all that source data into a new BI application. For all but a few companies, a “sky is the limit” approach to BI is unsustainable. As was the case with ERP and CRM, BI solutions take time to test, customize and adapt to an organization’s unique business processes. Only then do they produce good, actionable information.

Whether you plan to work with SAP BusinessObjects, Cognos, Oracle, Informatica, MicroStrategy, Tableau or other applications, consider these key questions:

- What data sources does my BI application need?

- Which data sources can be directly connected to our BI application and which ones should leverage smart ETL processing?

- How do I audit the users and applications involved in our BI workflow?

- How do I maintain enterprise security, without sticking IT admins with all the ETL work?